If Node.js is single-threaded, how does it handle high-concurrency I/O?

You’ve probably heard the pitch: "Node.js is fast because it’s non-blocking."

But then you look at the architecture. It has one thread. Just one. In a world of 16-core servers, that sounds ridiculous. How can a single thread handle 10,000 concurrent requests without catching fire?

I used to ask this question in interviews. I wasn't looking for a textbook definition of the Event Loop. I was looking for someone who understood the difference between orchestration and execution.

The answer lies in a clever deception. Node.js is single-threaded, but the system it runs on is not.

The "Project Manager" Analogy

Think of a traditional multi-threaded web server (like Apache or older Java implementations) as a bank with 50 tellers. If 51 customers show up, the 51st customer waits outside until a teller is free. It’s parallel, but it’s resource-heavy. Tellers are expensive.

Node.js is different. It’s a restaurant with one waiter (the Main Thread) and a massive kitchen staff (the System Kernel + Libuv).

- You (the Request) sit down and order a steak.

- The Waiter takes your order and yells it to the kitchen.

- The Waiter immediately goes to the next table. He doesn’t stand there watching the steak cook.

- When the steak is ready, the kitchen rings a bell (Event).

- The Waiter picks it up and brings it to you.

The Waiter is single-threaded. He can only do one thing at a time. But because he never waits, he can serve hundreds of tables while the kitchen does the heavy lifting in the background.
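Here is a minimal sketch of the waiter in code. The names cookOrder and serveTables are made up for this illustration, and setTimeout is only a stand-in for real I/O. The point is that 100 orders of 100ms each finish in roughly 100ms total, not 10 seconds, because the single thread never stands around waiting.

```typescript
// Hypothetical helper: the "kitchen" preparing one order.
// setTimeout stands in for real I/O; nothing blocks while it is pending.
function cookOrder(table: number, cookMs: number): Promise<string> {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`table ${table} served`), cookMs);
  });
}

// Hypothetical helper: the "waiter" taking every order before any food is ready.
async function serveTables(
  tableCount: number,
  cookMs: number,
): Promise<{ served: string[]; elapsedMs: number }> {
  const start = Date.now();
  // Each call returns immediately with a pending promise.
  const orders = Array.from({ length: tableCount }, (_, i) => cookOrder(i + 1, cookMs));
  // The bell rings (promises resolve) as each order completes.
  const served = await Promise.all(orders);
  return { served, elapsedMs: Date.now() - start };
}

const servicePromise = serveTables(100, 100);
servicePromise.then(({ served, elapsedMs }) => {
  console.log(`${served.length} tables served in ~${elapsedMs}ms`);
});
```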

The Technical Reality: Network vs. Files

This is where the "Node is single-threaded" statement gets nuanced. The "kitchen" actually has two different sections, and they work differently.

1. True Async (Network I/O)

For network operations (like handling HTTP requests), Node.js relies on the Operating System itself. Modern kernels (Linux with epoll, macOS with kqueue, Windows with IOCP) are incredibly efficient at handling thousands of open network sockets at once.

When you make a network request, Node just registers interest with the OS and moves on. The OS handles the traffic. Node does literally zero work until the packet arrives.
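To make that concrete, here is a rough sketch using the built-in net module: one echo server, 50 clients, one JavaScript thread. The client count, the pingOnce helper, and the loopback address are arbitrary choices for the demo, not anything Node prescribes.

```typescript
import { createServer, connect } from 'node:net';

// Echo server: the callback only runs when the OS reports data on a socket.
const server = createServer((socket) => {
  socket.on('data', (chunk) => socket.end(chunk)); // echo back, then close
});

// Hypothetical helper: open one connection, send a message, collect the reply.
function pingOnce(port: number, id: number): Promise<string> {
  return new Promise((resolve, reject) => {
    const client = connect(port, '127.0.0.1', () => client.write(`ping ${id}`));
    let reply = '';
    client.on('data', (chunk) => { reply += chunk.toString(); });
    client.on('end', () => resolve(reply));
    client.on('error', reject);
  });
}

const CLIENTS = 50; // arbitrary number for the demo

const replies: Promise<string[]> = new Promise((resolve, reject) => {
  // Port 0 asks the OS for any free port.
  server.listen(0, '127.0.0.1', () => {
    const address = server.address();
    const port = typeof address === 'object' && address !== null ? address.port : 0;
    // All 50 sockets are in flight at once; nothing here waits on any single one.
    Promise.all(Array.from({ length: CLIENTS }, (_, i) => pingOnce(port, i))).then(
      (all) => { server.close(); resolve(all); },
      (err) => { server.close(); reject(err); },
    );
  });
});

replies.then((all) => console.log(`${all.length} sockets answered on one thread`));
```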

2. The Worker Pool (File I/O & Crypto)

File systems are different. In many OSs, file operations are blocking. To get around this, Node.js leans on Libuv, the same C library that drives its event loop.

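Libuv maintains a Thread Pool (default size: 4 threads, adjustable through the UV_THREADPOOL_SIZE environment variable) for work that would otherwise block the main thread: calls the OS cannot perform asynchronously, plus a few CPU-heavy built-ins. This includes: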

- File System operations (fs.readFile)

- DNS lookups

- Compression (zlib)

- Crypto (crypto.pbkdf2)

So when you read a massive file, Node is using multiple threads. It’s just hiding them from you.
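A small sketch of what "hiding them from you" looks like in practice. The scratch file, its size, and the events array are assumptions made up for this demo. The read is handed off, the main thread carries on, and the callback fires later.

```typescript
import { readFile, writeFileSync, mkdtempSync, rmSync } from 'node:fs';
import { tmpdir } from 'node:os';
import { join } from 'node:path';

// Hypothetical scratch file so the sketch is self-contained.
const dir = mkdtempSync(join(tmpdir(), 'pool-demo-'));
const file = join(dir, 'big.txt');
const SIZE = 5 * 1024 * 1024; // 5 MiB, arbitrary
writeFileSync(file, 'x'.repeat(SIZE));

const events: string[] = [];

const readDone: Promise<number> = new Promise((resolve, reject) => {
  // The actual read runs off the main thread...
  readFile(file, (err, data) => {
    rmSync(dir, { recursive: true, force: true }); // clean up the scratch dir
    if (err) return reject(err);
    events.push('read finished');
    resolve(data.length);
  });
  // ...so the main thread reaches this line before the callback ever runs.
  events.push('main thread moved on');
});

readDone.then((bytes) => console.log(`${bytes} bytes read; order: ${events.join(' -> ')}`));
```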

Where It Breaks (The Heuristic)

This architecture is brilliant for I/O-heavy workloads (APIs, Gateways, Proxies). But it has a fatal flaw: CPU-bound tasks.

If the Waiter stops to chop the vegetables himself (calculate a Fibonacci sequence or resize an image), nobody gets their food. The entire restaurant halts.
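You can watch the restaurant halt with a few lines. This is just a sketch: chopVegetables is a made-up busy-wait helper, and the 200ms and 10ms figures are arbitrary. A timer that asked for 10ms does not fire until the main thread is free again.

```typescript
// Hypothetical helper: burns CPU on the main thread for roughly `ms` milliseconds.
function chopVegetables(ms: number): void {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // busy-wait: the event loop cannot run anything else during this loop
  }
}

const scheduledAt = Date.now();

// Ask for a callback after 10ms and record when it actually runs.
const timerDelay: Promise<number> = new Promise((resolve) => {
  setTimeout(() => resolve(Date.now() - scheduledAt), 10);
});

chopVegetables(200); // the waiter is now stuck in the kitchen

timerDelay.then((ms) => console.log(`10ms timer actually fired after ${ms}ms`));
```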

The Code: Visualization

Here is a quick script to visualize the difference.

import crypto from 'node:crypto';

const start = Date.now();

function logTime(label: string) {

console.log(`${label}: ${Date.now() - start}ms`);

}

// 1. THE WAITER (Main Thread)

// This is synchronous. It BLOCKS everything.

logTime('Start Synchronous Hash');

crypto.pbkdf2Sync('password', 'salt', 100000, 512, 'sha512');

logTime('End Synchronous Hash'); // Notice the delay here

// 2. THE KITCHEN (Libuv Thread Pool)

// These run in parallel in the background threads.

const MAX_CALLS = 4; // Default pool size

for (let i = 0; i < MAX_CALLS; i++) {

crypto.pbkdf2('password', 'salt', 100000, 512, 'sha512', () => {

logTime(`Async Hash ${i + 1} Done`);

});

}

// 3. THE QUEUE

// This runs IMMEDIATELY because the async calls above didn't block.

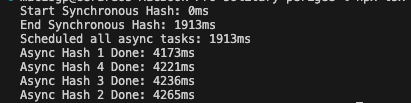

logTime('Scheduled all async tasks');What happens?

- The Sync hash halts the world.

- The 4 Async hashes start together.

- The "Scheduled" log prints before the async hashes finish.

- The 4 async hashes finish at roughly the same time (because they run on 4 separate Libuv threads).

Senior Engineer Takeaway

Node.js scales because it delegates. It excels when your application is a manager, not a worker.

The Heuristic: If your Node.js service is doing math, image processing, or heavy parsing, you are using the wrong tool (or you need Worker Threads). If your service is gluing databases and APIs together, it’s unbeatable.
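If you do need the math, here is a rough sketch of the Worker Threads escape hatch. The worker body is inlined as a JavaScript string and run with eval: true purely to keep the example in one file; in a real service you would more likely point Worker at a separate file. The fibInWorker helper and the heartbeat counter are made up for illustration.

```typescript
import { Worker } from 'node:worker_threads';

// Worker body as plain JavaScript source (an assumption made to keep this single-file).
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  function fib(n) { return n < 2 ? n : fib(n - 1) + fib(n - 2); }
  parentPort.postMessage(fib(workerData));
`;

// Hypothetical helper: run the CPU-heavy Fibonacci on a separate thread.
function fibInWorker(n: number): Promise<number> {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: n });
    worker.once('message', resolve);
    worker.once('error', reject);
  });
}

// A heartbeat on the main thread; it can keep ticking while the worker computes.
let ticks = 0;
const heartbeat = setInterval(() => { ticks++; }, 5);

const fibResult: Promise<number> = fibInWorker(30).then((value) => {
  clearInterval(heartbeat);
  console.log(`fib(30) = ${value}; main thread ticked ${ticks} times meanwhile`);
  return value;
});
```

Spinning up a worker has a startup cost, so this only pays off for work that is heavy enough to hurt the event loop in the first place.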
